Near-Synonym Choice using a 5-gram Language Model

By a mysterious writer

Last updated 09 July 2024

![Table 3 from Near-Synonym Choice using a 5-gram Language Model](https://d3i71xaburhd42.cloudfront.net/981fec6d9d4b45c2f0f4d80512fe0cf56419b8b1/10-Table3-1.png)

In this work, an unsupervised statistical method for the automatic choice of near-synonyms is presented and compared to the state of the art. We use a 5-gram language model built from the Google Web 1T data set. The proposed method works automatically, does not require any human-annotated knowledge resources (e.g., ontologies), and can be applied to different languages. Our evaluation experiments show that this method outperforms two previous methods on the same task. We also show that our proposed unsupervised method is comparable to a supervised method on the same task. This work is applicable to intelligent thesauri, machine translation, and natural language generation.
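The abstract does not spell out the scoring formula, so the following is only a minimal sketch of how a 5-gram model can drive near-synonym choice. It assumes a sentence with one gap, a list of candidate near-synonyms, and an n-gram count table. Each candidate is scored by summing `log(1 + count)` over every 2- to 5-gram that contains it, and the highest-scoring candidate wins. The names `covering_ngrams`, `score_candidate`, `choose_near_synonym`, and `TOY_COUNTS` are hypothetical, the scoring rule is an illustrative assumption rather than the paper's method, and the toy counts are invented stand-ins for Google Web 1T data.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Maps an n-gram (tuple of tokens) to its corpus frequency.
NgramCounts = Dict[Tuple[str, ...], int]

# Hypothetical toy counts, invented for illustration (NOT real Web 1T data).
TOY_COUNTS: NgramCounts = {
    ("serious", "mistake"): 120,
    ("serious", "error"): 90,
    ("serious", "blunder"): 10,
    ("a", "serious", "mistake"): 80,
    ("a", "serious", "error"): 40,
    ("mistake", "in"): 200,
    ("error", "in"): 300,
    ("made", "a", "serious", "mistake"): 30,
}


def covering_ngrams(tokens: Sequence[str], gap: int, n: int) -> List[Tuple[str, ...]]:
    """Return every n-gram of `tokens` whose window includes position `gap`."""
    first = max(0, gap - n + 1)
    last = min(gap, len(tokens) - n)  # negative when the sentence is shorter than n
    return [tuple(tokens[s:s + n]) for s in range(first, last + 1)]


def score_candidate(left: Sequence[str], right: Sequence[str], candidate: str,
                    counts: NgramCounts, max_n: int = 5) -> float:
    """Sum log(1 + count) over all 2- to max_n-grams containing the candidate.

    Unseen n-grams contribute log(1) = 0. This rule is an assumption made
    for illustration, not necessarily the formula used in the paper.
    """
    tokens = list(left) + [candidate] + list(right)
    gap = len(left)
    return sum(
        math.log1p(counts.get(gram, 0))
        for n in range(2, max_n + 1)
        for gram in covering_ngrams(tokens, gap, n)
    )


def choose_near_synonym(left: Sequence[str], right: Sequence[str],
                        candidates: Sequence[str], counts: NgramCounts,
                        max_n: int = 5) -> str:
    """Pick the highest-scoring candidate (ties go to the first one listed)."""
    return max(candidates, key=lambda c: score_candidate(left, right, c, counts, max_n))


if __name__ == "__main__":
    left = ["he", "made", "a", "serious"]
    right = ["in", "the", "report"]
    options = ["error", "mistake", "blunder"]
    for c in options:
        print(c, round(score_candidate(left, right, c, TOY_COUNTS), 2))
    print("chosen:", choose_near_synonym(left, right, options, TOY_COUNTS))
```

Taking the logarithm keeps one very frequent short n-gram (such as "error in" above) from outweighing several moderately frequent longer ones. Longer n-grams capture more context but are sparser, which is why the sketch sums over all orders from 2 up to 5 rather than using 5-grams alone.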


![Table 2 from Near-Synonym Choice using a 5-gram Language Model](https://d3i71xaburhd42.cloudfront.net/981fec6d9d4b45c2f0f4d80512fe0cf56419b8b1/8-Table2-1.png)

PDF] Near-Synonym Choice using a 5-gram Language Model

![PDF] Near-Synonym Choice using a 5-gram Language Model](https://cdn.britannica.com/86/150486-050-3EBC3516/MyPlate-guidelines-food-groups-sections-section-plate-2011.jpg)

Human nutrition, Importance, Essential Nutrients, Food Groups, & Facts

![PDF] Near-Synonym Choice using a 5-gram Language Model](https://www.simplypsychology.org/wp-content/uploads/kohlberg-moral-development.jpeg)

Kohlberg's Stages of Moral Development

Recomendado para você

-

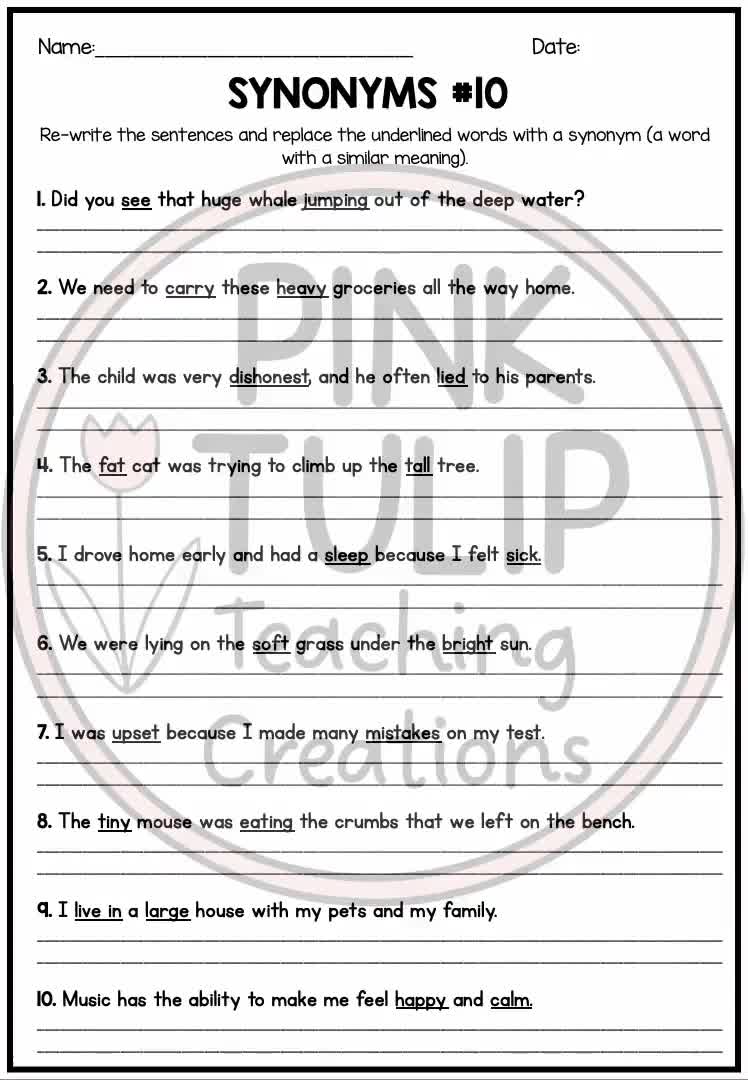

SYNONYMS: Words to use instead - Learn English Today.com09 julho 2024

-

mistake ka synonyms, synonym of mistake, mistake synonyms, similar words09 julho 2024

mistake ka synonyms, synonym of mistake, mistake synonyms, similar words09 julho 2024 -

Add A Synonym Fill in the Blanks Worksheet Pack (Download Now)09 julho 2024

Add A Synonym Fill in the Blanks Worksheet Pack (Download Now)09 julho 2024 -

synonyms picture cards09 julho 2024

synonyms picture cards09 julho 2024 -

Mistake ka synonym, Mistake synonym09 julho 2024

Mistake ka synonym, Mistake synonym09 julho 2024 -

Skill Booster Series: Synonyms09 julho 2024

Skill Booster Series: Synonyms09 julho 2024 -

EWA: Learn Languages - Who can find the synonym for the word join? Leave them in the comments 😍 JOIN THE EWA COMMUNITY TO IMPROVE YOUR LANGUAGE -->09 julho 2024

-

Test your vocabulary knowledge and tell us the synonym! #synonym09 julho 2024

-

Synonyms for Worked With To Use on Your Resume09 julho 2024

Synonyms for Worked With To Use on Your Resume09 julho 2024 -

Is Good Morning Capitalized? What About Good Afternoon?09 julho 2024

Is Good Morning Capitalized? What About Good Afternoon?09 julho 2024

você pode gostar

-

Fort Lobber -- Smash Karts 1v1 Tournament VI -- 11/17/2021 9am CT09 julho 2024

Fort Lobber -- Smash Karts 1v1 Tournament VI -- 11/17/2021 9am CT09 julho 2024 -

Fenty Beauty by Rihanna Pro Filt'r Soft Matte Longwear Foundation 1.08 oz New09 julho 2024

Fenty Beauty by Rihanna Pro Filt'r Soft Matte Longwear Foundation 1.08 oz New09 julho 2024 -

𝗕𝗿𝗼𝗹𝘆 𝗦𝘀𝗷 𝗟𝗲𝗴𝗲𝗻𝗱𝗮𝗿𝘆 in 202309 julho 2024

𝗕𝗿𝗼𝗹𝘆 𝗦𝘀𝗷 𝗟𝗲𝗴𝗲𝗻𝗱𝗮𝗿𝘆 in 202309 julho 2024 -

16 Manga Like The Promised Neverland09 julho 2024

16 Manga Like The Promised Neverland09 julho 2024 -

We also have Live Music Saturday and Sunday! - Picture of 360 UNO09 julho 2024

We also have Live Music Saturday and Sunday! - Picture of 360 UNO09 julho 2024 -

Dica de série: O Gambito da Rainha • Helena Mattos09 julho 2024

Dica de série: O Gambito da Rainha • Helena Mattos09 julho 2024 -

chess24 on the App Store09 julho 2024

chess24 on the App Store09 julho 2024 -

Subway Surfers Copa do Mundo - Jogos Online Wx09 julho 2024

Subway Surfers Copa do Mundo - Jogos Online Wx09 julho 2024 -

ДЮСШ #11 м. Дніпро09 julho 2024

-

COMO DAR PRESTIGE NO YOUR BIZARRE ADVENTURE DE FORMA COMPLETA E09 julho 2024

COMO DAR PRESTIGE NO YOUR BIZARRE ADVENTURE DE FORMA COMPLETA E09 julho 2024